The ChatGPT API is now available! You can now use the API in your applications.

Last Modified: 02 Mar, 2023 / Created: 02 Mar, 2023

OpenAI has announced the release of the ChatGPT API on its official blog.

Many people have been eagerly waiting for the release of ChatGPT's API, and now developers and those familiar with using APIs can easily utilize ChatGPT in their applications.

In summary, OpenAI has released a new API that lets developers integrate ChatGPT into their applications, with speech recognition offered through the accompanying Whisper API. According to the announcement, the cost of ChatGPT has been reduced by 90% since December 2022. The ChatGPT API uses the gpt-3.5-turbo model and is priced at $0.002 per 1,000 tokens, with plans to support other models in the future.

The blog post also mentions a new stable gpt-3.5-turbo release planned for April, as well as the Whisper API, which offers improved performance based on the open-source Whisper model.

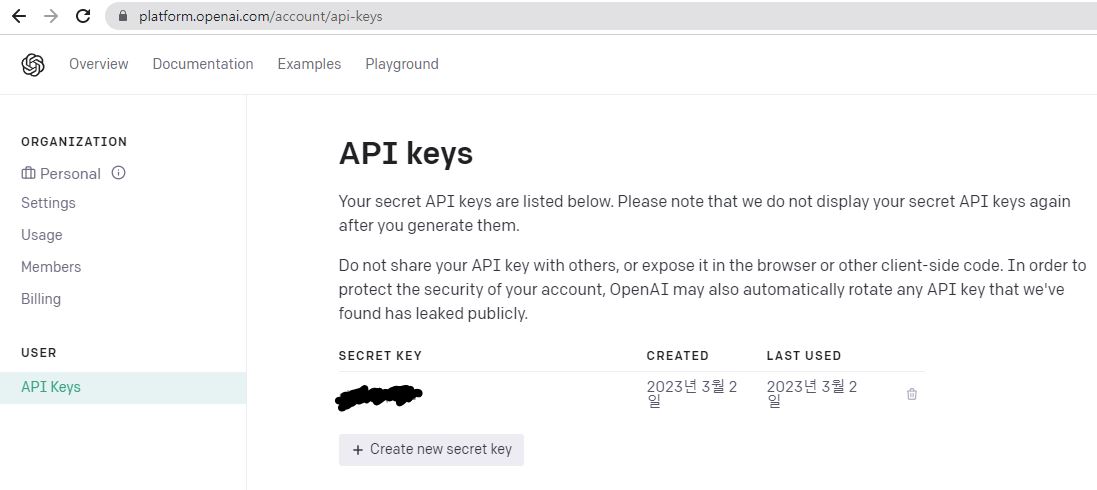

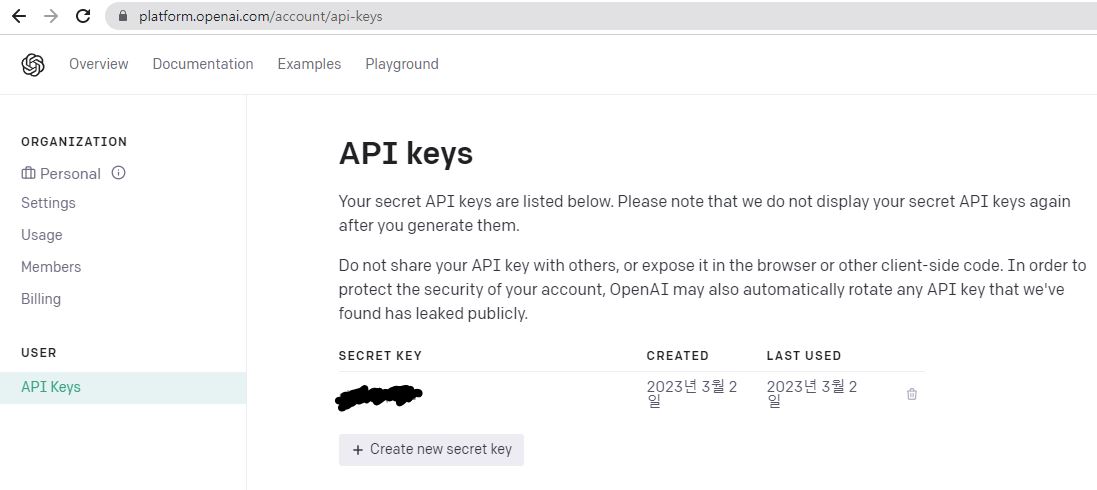


# ChatGPT API Tutorial: How to Use It

Below is what I tested with a simple curl request. The blog also includes an example that uses the openai package for Python, which looks even easier to use. The code below is the same as the example in the blog.

    # Uses the legacy openai package interface (versions before 1.0),
    # which reads the API key from the OPENAI_API_KEY environment variable.
    import openai

    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": "Tell the world about the ChatGPT API in the style of a pirate."}]
    )

    print(completion)

To use the ChatGPT API, you first need an API key for authentication. Free users can also issue an API key, but the API can only be used until the free trial usage expires, after which a paid plan is required.

- Log in to OpenAI.
- Go to https://platform.openai.com/account/api-keys to get a key.
- Click "+ Create new secret key" and copy the key.

Record the issued key somewhere safe, since you need it for every request.

Screenshot: API key issuance page

Next, I sent a curl request with the issued key, as follows.

    $ curl https://api.openai.com/v1/chat/completions \
        -H "Authorization: Bearer $myToken" \
        -H "Content-Type: application/json" \
        -d '{ "model": "gpt-3.5-turbo", "messages": [{"role": "user", "content": "Translate next in Korean: Hello. We are WEBISFREE.com!"}] }'

Response message:

    {
      "id": "chatcmpl-xxxxxxxxxxxxxxxxxxxxxxxxxxxx",
      "object": "chat.completion",
      "created": 1677754425,
      "model": "gpt-3.5-turbo-0301",
      "usage": {"prompt_tokens": 21, "completion_tokens": 22, "total_tokens": 43},
      "choices": [
        {
          "message": {"role": "assistant", "content": "\n\n안녕하세요. 웨비스프리 닷컴입니다!"},
          "finish_reason": "stop",
          "index": 0
        }
      ]
    }
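The two parts of this response that an application usually needs are the reply text and the token usage. The following is a minimal sketch of pulling them out in Python, assuming the response body is available as a string; the variable names are illustrative, and the response is copied from the curl call above with the id left redacted.

```python
import json

# Response body from the curl call above (raw string so the JSON "\n" escapes survive).
raw = r'{"id":"chatcmpl-xxxxxxxxxxxxxxxxxxxxxxxxxxxx","object":"chat.completion","created":1677754425,"model":"gpt-3.5-turbo-0301","usage":{"prompt_tokens":21,"completion_tokens":22,"total_tokens":43},"choices":[{"message":{"role":"assistant","content":"\n\n안녕하세요. 웨비스프리 닷컴입니다!"},"finish_reason":"stop","index":0}]}'

response = json.loads(raw)

# The reply is the message content of the first choice; strip the leading newlines.
reply = response["choices"][0]["message"]["content"].strip()

# The usage object reports prompt, completion, and total token counts.
usage = response["usage"]

print(reply)
print(usage["prompt_tokens"], usage["completion_tokens"], usage["total_tokens"])
```

Note that the reply starts with two newline characters in this response, which is why the sketch strips whitespace before printing.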

With curl, the API key goes in the Authorization header and the message goes in the JSON body. Here is what I asked:

"Translate next in Korean: Hello. We are WEBISFREE.com!"

Response message:

"안녕하세요. 웨비스프리 닷컴입니다!"

I asked for a translation and received a translated response. The site name webisfree.com was not transliterated quite correctly, but I was still able to get the desired result easily.
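As a rough Python counterpart to the curl call, the sketch below assembles the same request using only the standard library. The helper name `build_chat_request` and the `OPENAI_API_KEY` environment variable are assumptions made for illustration, not part of the original test. The actual send is left commented out so the sketch runs without a network connection or a real key.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Assemble the POST request (hypothetical helper, named for this sketch)."""
    body = {
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the key goes in the header
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Assumption: the key is stored in an environment variable named OPENAI_API_KEY.
api_key = os.environ.get("OPENAI_API_KEY", "YOUR_API_KEY")
request = build_chat_request(
    api_key, "Translate next in Korean: Hello. We are WEBISFREE.com!"
)

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as resp:
#     print(json.load(resp))
print(request.get_method(), request.full_url)
```

Reading the key from the environment rather than writing it into the script keeps it out of source control, which fits the advice to store the issued key carefully.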

I don't fully understand how tokens are counted, but at $0.002 per 1,000 tokens the price does not seem excessive. Moderate use should be fine, though the cost is harder to predict when there are many requests. After switching to a paid plan, it would be safer to set a usage limit, which would prevent unexpectedly large charges.
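To put the price in perspective, here is a small worked estimate in Python. It assumes that billing is based on the `total_tokens` value reported in the response (prompt plus completion) at the $0.002 per 1,000 tokens rate quoted above; the function name and the request count are made up for illustration.

```python
PRICE_PER_1K_TOKENS_USD = 0.002  # gpt-3.5-turbo rate quoted in this article


def estimate_cost_usd(total_tokens: int) -> float:
    """Estimate the charge for a given number of tokens (illustrative helper)."""
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS_USD


# The translation request above used 43 tokens in total.
print(f"{estimate_cost_usd(43):.6f}")           # 0.000086
# Hypothetical volume: 10,000 requests of the same size.
print(f"{estimate_cost_usd(43 * 10_000):.2f}")  # 0.86
```

Under these assumptions, a single short request costs a small fraction of a cent, and the total only becomes noticeable at high request volumes or with much longer prompts and replies.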

That wraps up the news about the new ChatGPT API.
